Artificial Intelligence infrastructure for your entire lifecycle

Why do you need AI infrastructure?

/ 01

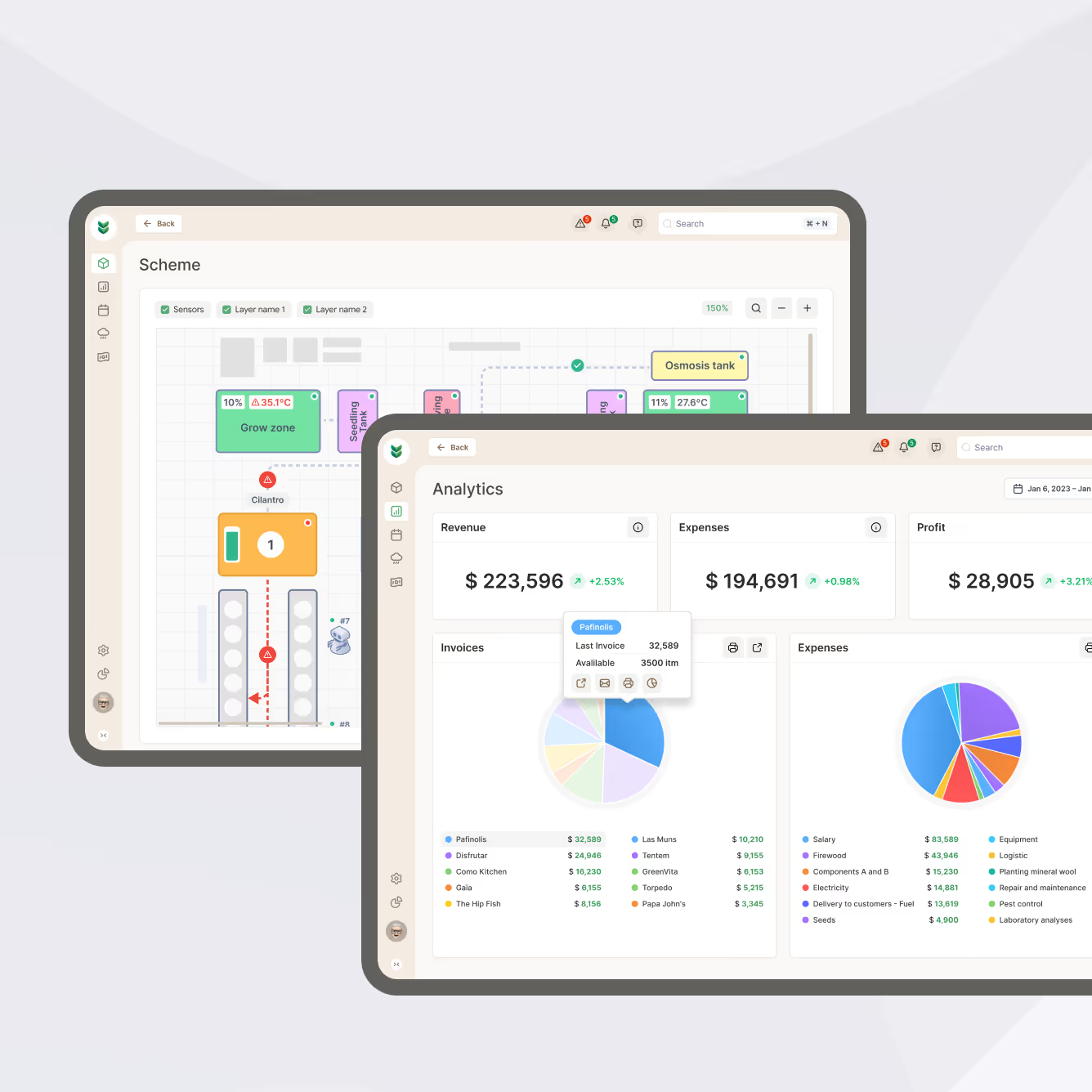

AI experimentation

Track different versions of AI models and parameters such as accuracy, precision, recall, and inference latency. Compare performance metrics across experiments and implement dataset version control.

/ 02

Data engineering & AI pipelines

Automate data ingestion, transformation, and feature engineering through streamlined AI data pipelines. Ensure data quality and support scalable AI systems, providing a solid foundation for analytics and model training.

/ 03

Automated training workflows

Optimize models using hyperparameter tuning and scale training across multiple GPUs/TPUs. Leverage distributed model training and automated retraining pipelines for AI model adaptation to evolving data patterns.

/ 04

Model performance monitoring

Detect concept drift, data drift, and performance degradation with ongoing AI model monitoring. Ensure reproducibility with version control and implement rollback mechanisms for flawed models.

Why hire AI infrastructure engineers at COAX

Complete AI technology stack

- Python - EasyOCR

- PyTorch - FAISS

- TensorFlow - OpenAI

- Scikit-learn - Apache Spark

- Hugging Face - AWS Athena

- AWS Glue24/7 AI model monitoring & support

- Docker - Prometheus

- Kubernetes - Grafana

- Terraform - AWS CloudWatch

- Pulumi - OnfidoIntegration with your existing infrastructure

We provide AI stack support, optimizing and adapting your existing technologies for fast and reliable integration with the AI infrastructure ecosystem.

Data security & compliance

We ensure compliance with GDPR and HIPAA requirements via enterprise-grade encryption, access controls, audit logging, and documentation maintenance for all data processing activities.

What our clients say

Our process

Assessment & planning

We evaluate your requirements and analyze data quality to design the most effective strategy for AI/ML infrastructure implementation.

Infrastructure setup

We create an environment for your AI infrastructure ecosystem, configuring all necessary components and integrations.

AI pipeline development

Our AI infrastructure engineers build AI data pipeline with automated workflows for data processing, model training, quality assurance, and deployment.

Continuous optimization

We continuously analyze system performance, identify bottlenecks, and make improvements to keep your AI infrastructure efficient and cost-effective.

Frequently asked questions and answers

AI infrastructure requires specialized hardware for model training (like GPUs), data lake architectures, and tools for experiment tracking and model deployment. Traditional IT infrastructure focuses on general computing, storage, and networking needs.

While DevOps handles general software deployment, MLOps focuses on machine learning systems, adding model management, experiment tracking, and automated retraining capabilities.

MLOps pipelines add specialized stages for data validation, model training, and model monitoring. Unlike traditional CI/CD, they include steps for experiment tracking, model registry management, and automated retraining when performance drops. The pipeline must handle both code and data versioning, making it more complex than standard software deployments.

Companies that are scaling their AI initiatives beyond simple experiments — typically mid to large enterprises in sectors like finance, healthcare, manufacturing, and retail deploy multiple ML models in production.

They provide you with comprehensive MLOps consulting services, help build scalable ML systems, implement model monitoring, and establish automated pipelines for training and deployment. This includes setting up data validation, model registries, and monitoring frameworks.

They design and maintain the systems that power ML operations, including compute resources, data pipeline machine learning architectures, and deployment platforms.

Our teams track prediction accuracy, data drift, and system health through automated AI maintenance and monitoring systems that alert when models need retraining or when issues arise.

An AI model maintenance tool continuously monitors model performance metrics like prediction accuracy, data drift, and inference speed. When these metrics decline below set thresholds, automated alerts notify the team. The system runs diagnostic checks to identify the root cause. Finally, it triggers appropriate maintenance actions.

Through automated failover mechanisms, regular model performance monitoring, and clear procedures for model retraining and rollback when performance degrades. Regular testing and validation of both models and infrastructure components is also a must.

Want to know more?

Check our blog

What I’ll do next?

1

Contact you within 24 hours

2

Clarify your expectations, business objectives, and project requirements

3

Develop and accept a proposal

4

After that, we can start our partnership